Request Deduplication

In general, when we make a request and don’t get a response, there are two possi- bilities.

In one case, the request was never received by the API server, so of course we haven’t received a reply.

In the other, the request was received but the response never made it back to the client.

When this happens with idempotent methods (e.g., methods that don’t change anything, such as a standard get method), it’s really not a big deal. If we don’t get a response, we can always just try again.

But what if the request is not idempotent?

The main goal of this pattern: to define a mechanism by which we can prevent duplicate requests, specifically for non-idempotent methods, across an API.

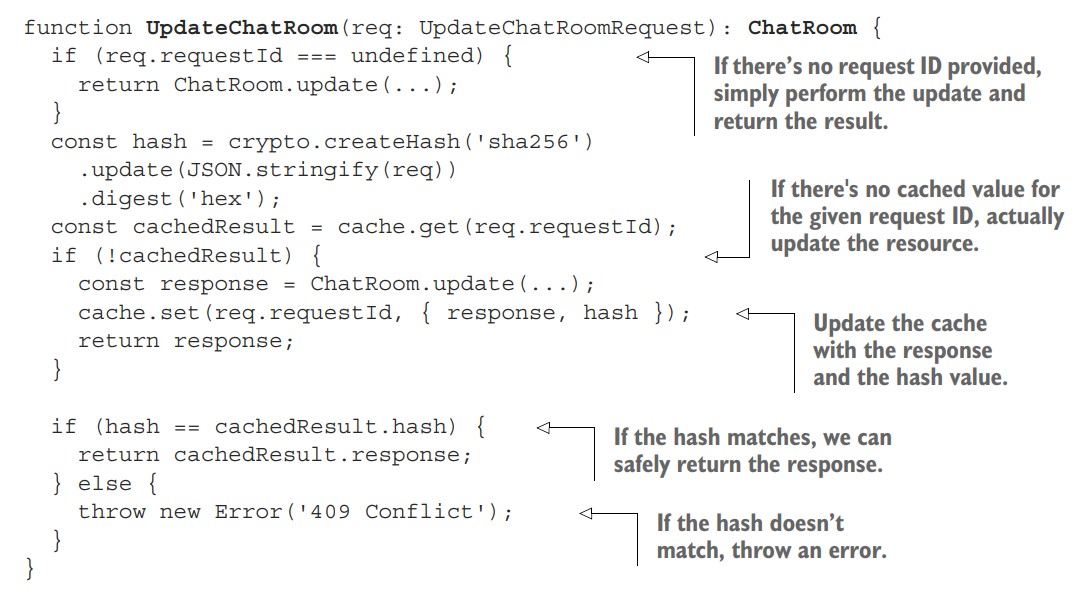

This pattern explores the idea of providing a unique identifier to each request that we want to ensure is serviced once and only once. Using this identifier, we can clearly see whether a current incoming request was already handled by the API service, and if so, can avoid acting on it again.

This leads to a tricky question: what should we return as the result when a duplicate request is caught?

-

An error does prevent duplication, but it's not quite what we're looking for in a result.

-

To handle this, we can cache the response message that went along with the request ID provided that when a duplicate is found, we can return the response as it would have been returned when the request was first made.

Implementation

API definition

abstract class ChatRoomApi {

@post("/chatRooms")

CreateChatRoom(req: CreateChatRoomRequest): ChatRoom;

}

interface CreateChatRoomRequest {

resource: ChatRoom;

requestId?: string;

}

Exercises

- Why is it a bad idea to use a fingerprint (e.g., a hash) of a request to determine whether it's a duplicate?

| We cannot guarantee that we won’t actually need to execute the same request twice. |

- Why would it be a bad idea to attempt to keep the request-response cache up-to-date? Should cached responses be invalidated or updated as the underlying resources change over time?

| It would lead to even more confusion than the stale data. |

| It should not. The primary goal of this pattern is to allow clients to retry requests without causing potentially dangerous duplication of work. And a critical part of that goal is to ensure that the client receives the same result in response that they would have if everything worked correctly the first time. |

- What would happen if the caching system responsible for checking duplicates were to go down? What is the failure scenario? How should this be protected against?

| All requests would be refered as unique. |

| Make the caching system highly available. |

- Why is it important to use a fingerprint of the request if you already have a request ID? What attribute of request ID generation leads to this requirement?

| Ensure that the request itself hasn’t changed since the last time we saw that same identifier. |

| Collision |

Summary

-

Requests might fail at any time, and therefore unless we have a confirmed response from the API, there's no way to be sure whether the request was processed.

-

Request identifiers are generated by the API client and act as a way to deduplicate individual requests seen by the API service.

-

For most requests, it's possible to cache the response given a request identifier, though the expiration and invalidation parameters must be thoughtfully chosen.

-

API services should also check a fingerprint of the content of the request along with the request ID in order to avoid unusual behavior in the case of request ID collisions.

- In this pattern, we use a simple unique identifier to avoid duplicating work in our APIs. This is ultimately a trade-off between permitting safe retries of non-idempotent requests and API method complexity (and some additional memory requirements for our caching requirements).